Welcome to the MCV-Intention Dataset

Industry 5.0 shifts the manufacturing paradigm from a technology-driven to a value-driven mode, in which humans play a vital role in the manufacturing scenario. Against this background, human-robot collaboration is emerging and attracting growing attention. It requires seamless, real-time collaboration between humans and robots. The robot must therefore accurately understand the human's assembly intention from assembly scene data and provide assistance accordingly.

It is therefore necessary to construct a dataset that captures the operator's assembly process, so that the robot can make full use of assembly scene knowledge to identify the operator's assembly intention and provide corresponding assistance. As a result, the cognitive burden on the human operator can be decreased and product quality can be improved.

MCV-Intention is a multi-modal dataset (RGB, depth, skeleton, optical flow, IR, masked operator) with an additional cross view captured from directly above. An example of HAIRD is shown in the following GIF. Thanks to Mr. JiaCheng Li for helping with the demonstration.

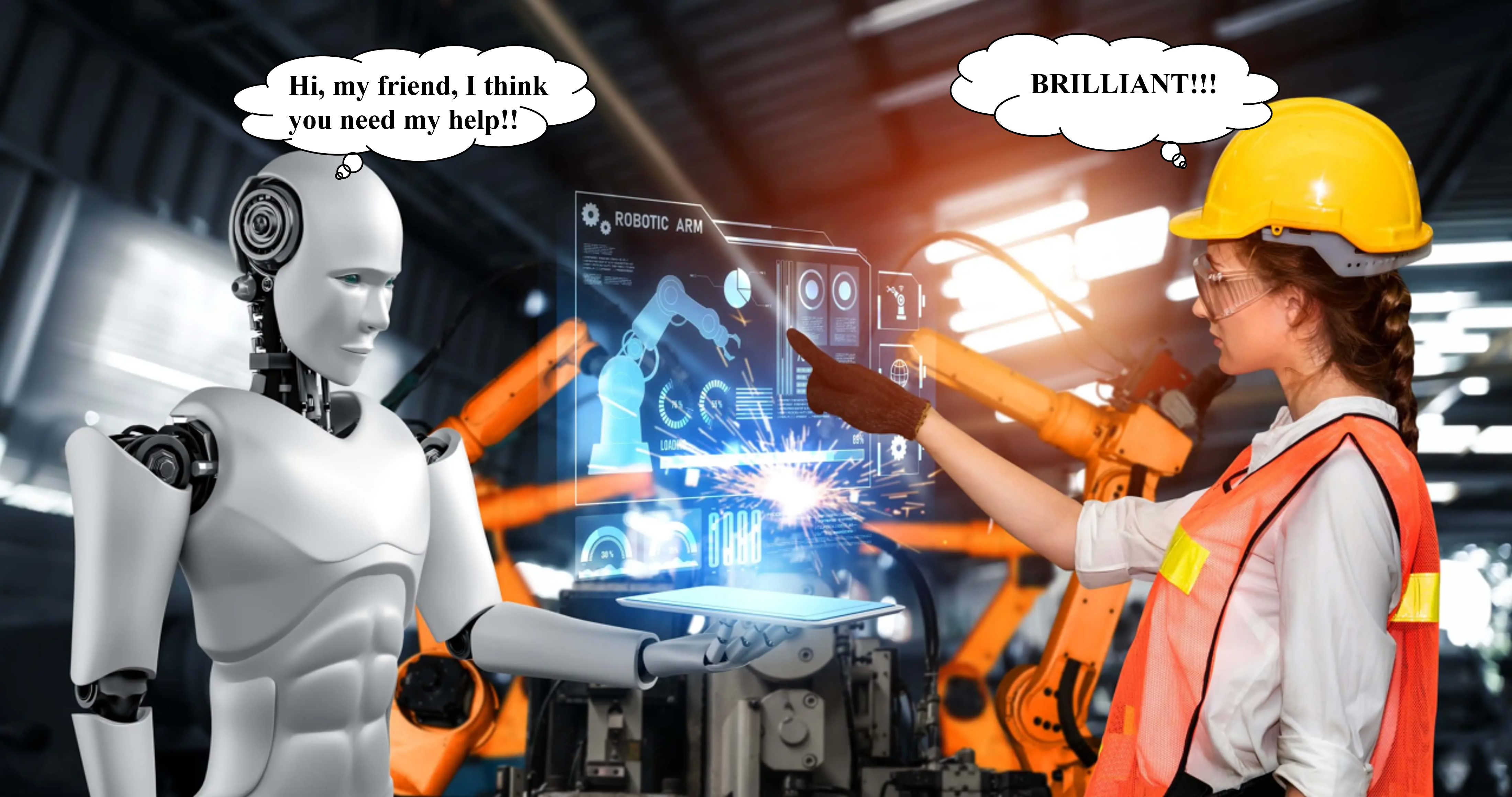

The data collection hardware consists of two depth cameras (camera_front and camera_up), an assembly, a robot, a human operator, and a server. Camera_front collects the scene directly ahead in the RGB modality; the other modalities (depth, skeleton, optical flow, IR, masked operator) also come from camera_front. Camera_up supplements the scene data with a second view, in which the assembly parts and the operator's hands can be observed directly.

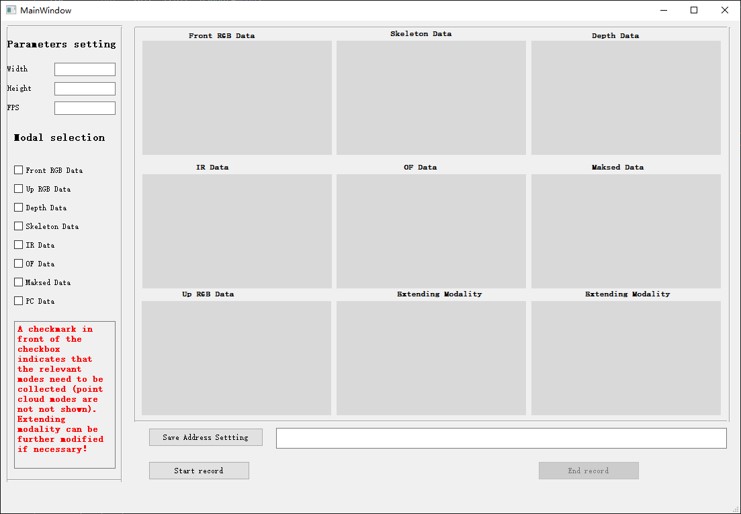

The software is developed with PySide2, OpenCV, and the corresponding camera SDKs (here, Orbbec and Intel RealSense D435i). It consists of four modules: parameter settings, modal selection, save path selection, and data display. The source code can be found in the author's GitHub repository.

Parameter settings module: sets the key parameters, namely height, width, and data collection frame rate (fps).

Modal selection module: selects the modalities and views to collect and save by checking the corresponding boxes.

Save path selection module: selects the path where the collected modalities and views are saved.

Data display module: displays all modalities and views to the user.
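The settings exposed by these modules can be pictured as one small configuration object. The sketch below is illustrative only and is not taken from the author's source code: the class name `CaptureConfig`, the default values, the lowercase modality identifiers, and the `save_root/view/modality` directory layout are all assumptions. It also assumes that every selected view is paired with every selected modality, which may not match the actual recording setup.

```python
from dataclasses import dataclass
from pathlib import Path

# Modality and view names follow this README; the identifiers are assumptions.
MODALITIES = ("rgb", "depth", "skeleton", "optical_flow", "ir", "masked_operator")
VIEWS = ("camera_front", "camera_up")


@dataclass
class CaptureConfig:
    """Hypothetical container for the settings chosen in the GUI modules."""

    width: int = 640            # parameter settings module (assumed default)
    height: int = 480           # parameter settings module (assumed default)
    fps: int = 30               # parameter settings module (assumed default)
    modalities: tuple = ("rgb", "depth")   # modal selection module
    views: tuple = ("camera_front",)       # modal selection module
    save_root: Path = Path("recordings")   # save path selection module

    def __post_init__(self):
        # Reject non-positive image sizes and frame rates.
        if self.width <= 0 or self.height <= 0 or self.fps <= 0:
            raise ValueError("width, height and fps must be positive")
        # Reject names that are not listed in MODALITIES or VIEWS.
        unknown = set(self.modalities) - set(MODALITIES)
        if unknown:
            raise ValueError(f"unknown modalities: {sorted(unknown)}")
        unknown = set(self.views) - set(VIEWS)
        if unknown:
            raise ValueError(f"unknown views: {sorted(unknown)}")
        # Accept plain strings for the save path as well.
        self.save_root = Path(self.save_root)

    def output_dirs(self):
        """Return one directory per selected (view, modality) pair."""
        return [
            self.save_root / view / modality
            for view in self.views
            for modality in self.modalities
        ]


if __name__ == "__main__":
    cfg = CaptureConfig(modalities=("rgb", "ir"), views=VIEWS, save_root="out")
    for directory in cfg.output_dirs():
        print(directory)
```

Validating the selection up front keeps a mistyped modality or view name from silently producing an empty recording folder.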

The data construction rules can be found in the following PDF documents.

The assembly is a satellite, modeled with the open-source software FreeCAD. The model view and exploded view are shown below.

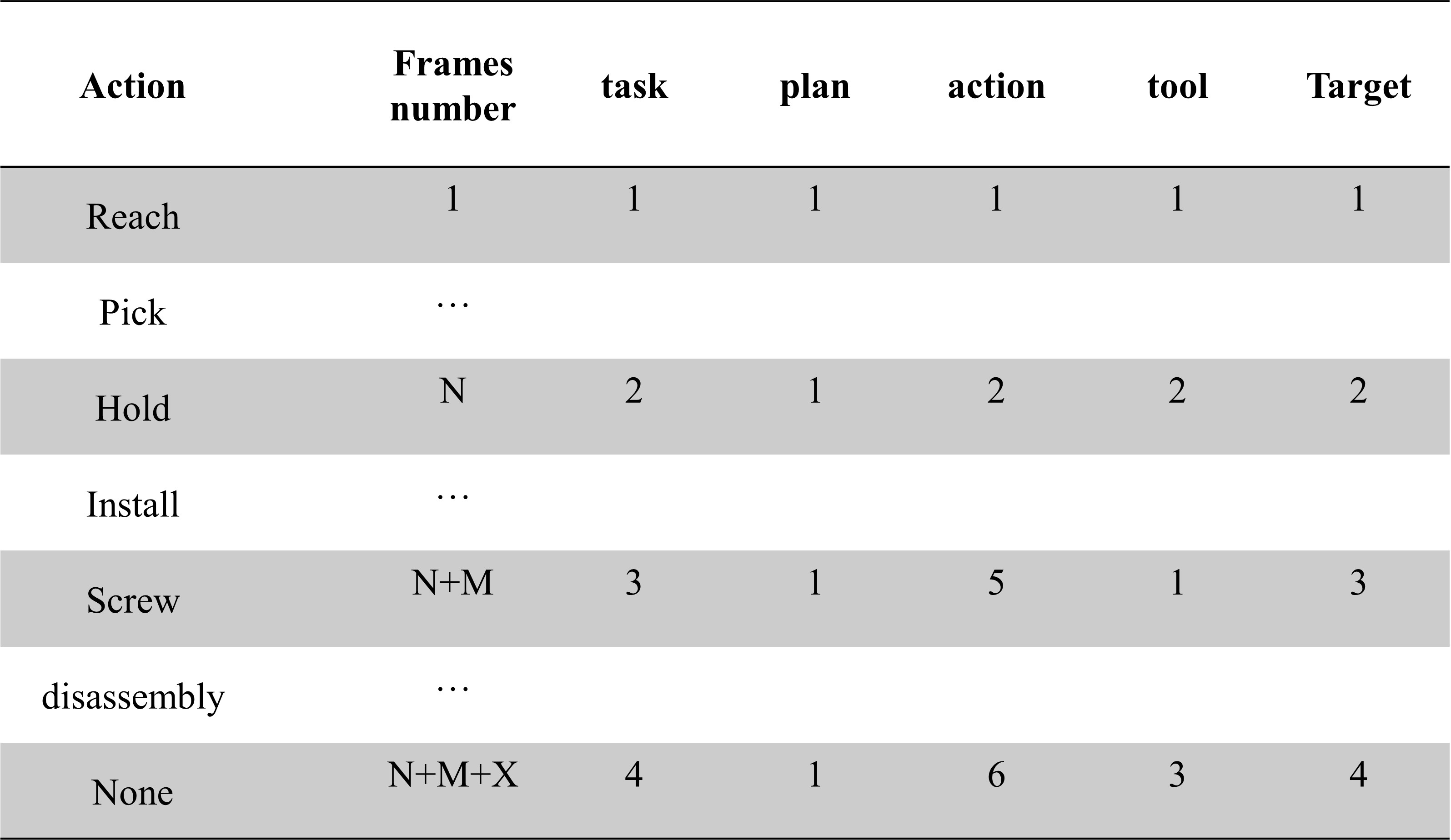

Here is an example of the dataset annotations:

[1] Leng, Jiewu, et al. "Industry 5.0: Prospect and retrospect." Journal of Manufacturing Systems 65 (2022): 279-295.

[2] Huang, Sihan, et al. "Industry 5.0 and Society 5.0—Comparison, complementation and co-evolution." Journal of Manufacturing Systems 64 (2022): 424-428.

[3] Zheng, Hao, Regina Lee, and Yuqian Lu. "HA-ViD: A Human Assembly Video Dataset for Comprehensive Assembly Knowledge Understanding." Advances in Neural Information Processing Systems 36 (2024).

[4] Cicirelli, Grazia, et al. "The HA4M dataset: Multi-Modal Monitoring of an assembly task for Human Action recognition in Manufacturing." Scientific Data 9.1 (2022): 745.

Please fill out the form below to request access to the MCV-Intention dataset: